Really augmenting AR

19th November 2020

By now, we’re all well-versed in what augmented reality can do. It lets us place virtual objects in real environments, then interact with and manipulate them through a device such as a smartphone, tablet or even a headset. Over the last few years, since the ubiquitous Pokémon Go launched AR into the mainstream, the capabilities of augmented reality have become more and more sophisticated. But there are still limitations, and visual quality is one of them. That’s why we’ve been experimenting with ways to augment the visual assets in AR experiences without overloading the devices used to view them.

Striving for more

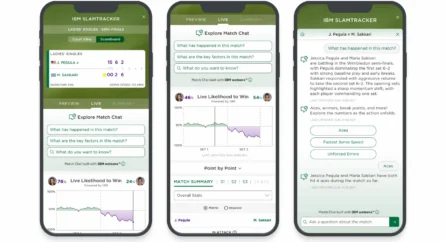

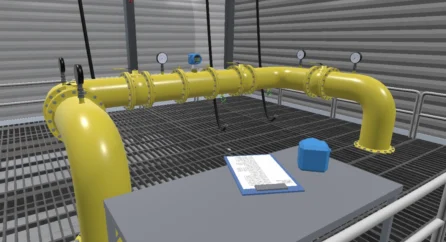

A little while back, Nvidia shared a demo of their 5G-based CloudXR, in which they streamed highly detailed CAD models to VR and AR devices. What was striking was seeing AR support the level of detail found in a CAD model. At Infinite Form, we create AR experiences for a range of uses and invariably come up against the same balancing act: how to get the best possible visual quality for the assets while keeping the overall size of the app down. It’s always a bit of a compromise, although AR platforms are evolving all the time. Even so, we wanted to push the boundaries a bit further and find a way of building AR experiences with high-fidelity, PC-grade graphics ourselves. So we did.

Pushing the tech boundaries

We devised a way of using video streaming to enable a central PC to stream high-end assets to a handheld device, where they can be viewed in real time in AR. Essentially, this takes the job of rendering 3D assets away from the device and gives it to a much more powerful computer instead. In a nutshell, the phone sends its positional information to the PC, which renders the frame in a game engine (we use Unity but it’s equally doable in Unreal). That frame is then encoded into a video stream, transmitted back to the phone and composited over the phone’s camera feed.
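The two phone-side ends of that loop, sending a pose and compositing the returned frame over the camera image, can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions rather than the actual implementation: the packet layout, the names (`Pose`, `encode_pose`, `decode_pose`, `composite_pixel`, `composite_frame`) and the idea that the decoded frame arrives with an alpha channel are all hypothetical, and the rendering, video encoding and network transport in between are left out.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire format (an assumption, not taken from the article):
# 3 floats position, 4 floats rotation quaternion, 1 double timestamp,
# little-endian with no padding, 36 bytes in total.
POSE_FORMAT = "<7fd"


@dataclass(frozen=True)
class Pose:
    position: tuple   # (x, y, z) of the device in metres
    rotation: tuple   # orientation as a quaternion (x, y, z, w)
    timestamp: float  # capture time in seconds


def encode_pose(pose: Pose) -> bytes:
    """Pack the device pose into the bytes the phone would send to the PC."""
    return struct.pack(POSE_FORMAT, *pose.position, *pose.rotation, pose.timestamp)


def decode_pose(data: bytes) -> Pose:
    """Unpack a pose packet on the PC side, before rendering the frame."""
    values = struct.unpack(POSE_FORMAT, data)
    return Pose(values[0:3], values[3:7], values[7])


def composite_pixel(rendered_rgba, camera_rgb):
    """Alpha-blend one rendered pixel over one camera pixel (8-bit channels)."""
    *rendered_rgb, alpha_8bit = rendered_rgba
    alpha = alpha_8bit / 255
    return tuple(
        round(alpha * src + (1 - alpha) * dst)
        for src, dst in zip(rendered_rgb, camera_rgb)
    )


def composite_frame(rendered, camera):
    """Blend a decoded RGBA frame over a camera RGB frame of the same size.

    Frames are plain nested lists of pixel tuples to keep the sketch
    dependency-free; a real viewer would do this on the GPU.
    """
    return [
        [composite_pixel(r_px, c_px) for r_px, c_px in zip(r_row, c_row)]
        for r_row, c_row in zip(rendered, camera)
    ]


if __name__ == "__main__":
    pose = Pose((0.5, 1.25, -2.0), (0.0, 0.0, 0.0, 1.0), 12.5)
    packet = encode_pose(pose)              # phone -> PC
    print(decode_pose(packet))              # PC reads the pose back
    rendered = [[(255, 0, 0, 255), (0, 0, 0, 0)]]   # opaque red, transparent
    camera = [[(1, 2, 3), (4, 5, 6)]]
    print(composite_frame(rendered, camera))        # PC frame over camera feed
```

Where the rendered pixel is fully transparent, the camera pixel shows through untouched, which is what lets the streamed model appear to sit in the real scene. Most video codecs do not carry alpha directly, so a real pipeline would need some way to deliver that mask alongside the colour data.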

What does this mean?

This means that for any situation where you need a desktop-class GPU to get the best results, you can now do it in AR on a relatively inexpensive mobile phone or tablet, given a good WiFi connection. This opens up AR as a platform for complex 3D models that mobile hardware wouldn’t usually be able to render, for example a model that involves high numbers of polygons, complex lighting, materials and shaders, high resolution textures, and potentially complex physics.

So what are the benefits?

It looks better

Quite simply, this process raises the bar for visual quality in AR, and even for the interaction available. That makes AR a more useful tool, capable of showing highly realistic models, which in turn makes the experience more immersive.

Stream to multiple devices

With a central PC streaming the graphics over WiFi, it’s entirely possible to stream the same feed to multiple devices without the need for multi-networking, essentially allowing multiple users to see and interact with the same session. This could be useful in all sorts of ways, from expanding AR’s gaming potential to serving as a sales tool: for example, an AR car configurator that the salesperson and the potential buyer can both see together in real time.

Practical

This process can make it practical for modellers to preview their assets without having to build out a whole app or push it to the app store. Instead, using PC rendering, they can stream their models to a device and drop them into the real world much more easily, making the process of creating models for AR or even VR more efficient.

Smaller app sizes

With the onus of rendering assets removed from the device, the overall app size will come down. That both makes the app more appealing for people to download and lets you have more fun with your experience without being restricted by the maximum file size limits for Apple or Android.

Easier to update

If your app’s content is streamed from a central PC and the mobile app effectively works as just the viewer, then you don’t have to update the app itself when you need to make content changes. Instead, you can update the central source, which is quicker and easier.

Level playing field

Devices of differing levels of power and capability can all access the same graphical experience so long as they’re able to render a simple video feed, meaning you no longer have to own the most expensive smartphone to get the best experience.

What next?

At the moment, this process requires a good WiFi connection, so it is most feasible in setups where you can control the local WiFi network and how people connect to it, for example in a museum or car dealership, or at home for gaming purposes. However, with the upcoming arrival of 5G and edge streaming, it’s entirely feasible that this way of running complex AR experiences could become much more common practice in the future. In the meantime, we’re enjoying experiencing AR that looks really, really good.